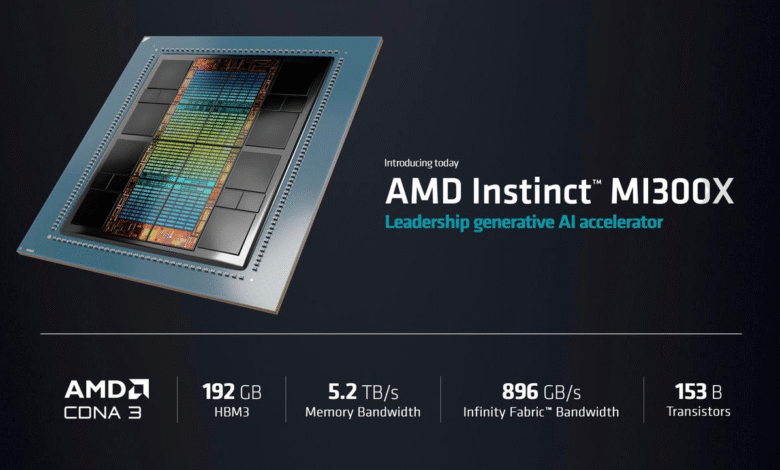

AMD AI Chips: Unveiling the Next Generation

AMD is positioning its AI chips to reshape the market for artificial intelligence hardware, led by the newly unveiled Instinct MI400 series. Backed by a strategic partnership with OpenAI, the chips are engineered for hyperscale computing and aimed at customers ready to scale their AI operations across large data centers. At a highly anticipated launch event in San Jose, California, AMD CEO Lisa Su detailed the capabilities of the MI400 series, emphasizing its potential to compete with, and outperform, rival products, particularly Nvidia’s. As the race for AI leadership heats up, AMD is betting that this approach will set a new benchmark for the industry and give enterprises greater efficiency and capability for their computational workloads.

In the fast-moving AI sector, AMD’s next-generation processors are intended to improve the performance of machine learning applications and large-scale data processing. The Instinct MI400, built for organizations pursuing large-scale AI workloads, represents a step forward in design and power efficiency. By working closely with AI leaders such as OpenAI, AMD aims to ensure its hardware fits the large-scale infrastructure that hyperscale computing requires. As competition with rivals such as Nvidia intensifies, AMD’s focus on high-performance, cost-effective AI hardware is likely to appeal to major enterprises looking to optimize how they handle and process data. The introduction of these chips marks a new phase in AI hardware and offers potential solutions to the challenges today’s technologists face.

AMD AI Chips Revolutionizing Hyperscale Computing

The launch of AMD’s Instinct MI400 series marks a pivotal moment for AI hardware, particularly in hyperscale computing. The chips are engineered specifically for large-scale AI computation, allowing data centers to significantly improve their performance. By integrating multiple MI400 chips into a unified rack-scale system, businesses can gain the efficiency and scalability needed to meet today’s data demands.

This strategy positions AMD as a formidable challenger to established competitors, most notably Nvidia. Because the MI400 series is designed to support thousands of interconnected chips, AMD aims to turn traditional data processing setups into tightly integrated, highly responsive systems. Expectations are high, as the technology is designed to keep AI operations running smoothly across large data center networks.

Collaborative Innovations: AMD and OpenAI Partnership

A major highlight of the launch was the partnership between AMD and OpenAI. OpenAI CEO Sam Altman joined AMD’s Lisa Su on stage and emphasized the collaboration that went into refining the MI400 chips. The partnership reflects the growing interdependence between AI developers and hardware manufacturers as both work to build systems capable of supporting more sophisticated AI models and applications.

OpenAI’s involvement in developing the MI400 chips gives AMD direct insight into the performance requirements and optimization needs of cutting-edge AI applications. That feedback has been instrumental in shaping a product built around what demanding AI workloads actually need. With AMD chips set to power future OpenAI initiatives, both companies are positioned to push the boundaries of machine learning and data processing.

Competing with Nvidia: AMD’s Strategic Positioning

With the launch of the MI400 chips, AMD is mounting a more direct challenge to Nvidia in AI hardware. Its strategy includes not only advancing its technology but also offering cost-effective solutions that could draw businesses away from Nvidia’s ecosystem. By capitalizing on lower operating costs, AMD aims to offer a compelling alternative without sacrificing performance.

The MI400 chips and the accompanying Helios system are designed to compete with Nvidia offerings such as its Blackwell chips. Because AMD is expected to undercut Nvidia on price, companies heavily invested in AI research and development may realize significant savings while still achieving high performance. This approach could shift market dynamics, especially as demand for AI technology continues to soar.

The Future of AI Hardware: Advanced Capabilities of the MI400 Series

AMD’s MI400 series stands out in AI hardware for capabilities tailored to high-performance computing. Its architecture is designed for the fast processing that large AI training datasets demand, and because the chips work together within a unified rack system, data can be transferred and processed quickly, which is essential for AI applications.

The MI400’s design also reflects AMD’s aim to meet, and get ahead of, current market needs. With AI expected to spread across industries, the MI400 series is well positioned to address growing demand for accelerated computing. This positions AMD as a forward-looking player in AI hardware, one that could help set new industry benchmarks.

Market Impact: AMD’s Growth in the AI Sector

Growing adoption of AMD’s AI chips among prominent customers underscores the company’s rising influence in the AI market. With the AI chip market expected to exceed $500 billion by 2028, AMD’s investments in acquisitions and product development have placed it at the forefront of this growth. By rolling out products such as the MI355X, AMD is showing it can serve a range of needs in the AI market and strengthen its presence there.

This growth reflects a broader trend of companies seeking AI solutions that deliver noticeable performance gains. AMD’s focus on cost-effectiveness, combined with competitive technology, positions it well as organizations try to expand their computing capabilities without incurring prohibitive costs. That advantage could prove pivotal in winning over AI-driven enterprises looking for reliable hardware partners.

Innovative Design: AMD’s Unified Rack-System Technology

The introduction of the Helios server rack alongside the Instinct MI400 chips showcases AMD’s innovative approach to AI hardware design. This unified rack-system architecture enables thousands of chips to operate as a cohesive unit rather than individual components, promoting efficient power usage and optimized performance. This level of integration is crucial for companies needing to scale their AI operations without compromising efficiency.

By rethinking how AI hardware is structured within data centers, AMD aims to set a new standard for the industry. Because MI400 chips interconnect within the Helios system, data can be processed and accessed faster, giving businesses a competitive edge in hyperscale computing environments. The design underscores AMD’s focus on efficiency in the rapidly expanding field of AI.

Strategic Acquisitions: Strengthening AMD’s AI Ecosystem

AMD’s expansion strategy includes acquiring or investing in 25 AI companies over the past year. These deals aim to strengthen its workforce and its capabilities in AI chip technology, with a focus on building powerful tools for the next generation of AI applications. By building out its ecosystem this way, AMD gains expertise that supports its goal of delivering advanced AI solutions.

This focus on bringing in fresh talent and new ideas is a pivotal move in an industry evolving at a rapid pace. Backed by a diverse AI technology portfolio, AMD is expected to accelerate development of its chips, including future generations beyond the MI400, to stay competitive against formidable rivals such as Nvidia.

Cost-Effectiveness: AMD’s Competitive Edge Over Rivals

In the fiercely competitive AI chip market, AMD is carving out a niche by prioritizing cost-effectiveness. The price advantage of its MI400 series lets customers reduce operating expenses while still getting robust performance. By pricing its products below competitors such as Nvidia, AMD is well placed to attract large customers seeking high-quality yet affordable AI solutions.

Affordability without compromised performance is increasingly appealing to organizations across many sectors. As businesses integrate AI more fully into their operations, the ability to get top-tier performance at lower cost is a critical factor, and AMD’s focus on value is expected to resonate with customers trying to get the most from their AI investments.

The Role of Feedback: Partnerships Driving Innovation in AI

The collaboration between AMD and OpenAI shows how customer feedback can drive innovation in technology development. Throughout the design of the MI400 series, OpenAI’s input on performance expectations helped produce a product built around the needs of AI developers. The partnership highlights the importance of customer involvement in shaping future technologies so that the end products are both efficient and powerful.

Such collaborations are critical in an industry characterized by rapid advancement. Engaging with leading AI organizations improves AMD’s products and encourages ongoing innovation, since insights gained can be reflected in future designs. The result is a feedback loop that continually improves both AMD’s chips and the software built on them, to the benefit of both sides.

Frequently Asked Questions

What are AMD AI chips and what is the Instinct MI400 series?

AMD AI chips, particularly the Instinct MI400 series, are the company’s next-generation products for high-performance AI computing. Unveiled by CEO Lisa Su, the chips are designed to be combined into ‘rack-scale’ systems that support hyperscale computing across large data center operations.

How does AMD’s partnership with OpenAI impact the development of AI chips?

The partnership between AMD and OpenAI significantly influences the development of AMD AI chips like the Instinct MI400 series. OpenAI’s feedback during the design process has helped tailor these chips to meet the high demands of AI applications, enhancing performance and integration.

In what ways can the Instinct MI400 series enhance hyperscale computing?

The Instinct MI400 series is engineered to support hyperscale computing by allowing thousands of interconnected chips to function seamlessly within a single rack-scale system. This capability is crucial for clients seeking efficiency and scalability in AI workloads across data centers.

How does AMD plan to compete with Nvidia in the AI chip market?

AMD aims to compete with Nvidia by pricing its offerings, including the Instinct MI400 series, below Nvidia’s, helped by lower operating costs. This pricing strategy, combined with technological advances and strong collaborations such as the one with OpenAI, positions AMD as a formidable competitor.

What advantages does the integration of AMD AI chips into server racks offer?

Integrating AMD AI chips, such as the Instinct MI400, into server racks like Helios provides significant advantages, including enhanced communication between chips for optimized performance and the capability to create robust rack-scale systems that meet the needs of large-scale AI applications.

What are the expected trends for the AI chip market as AMD expands its presence?

The AI chip market, projected to exceed $500 billion by 2028, is expected to see intensified competition as AMD pushes further into it with products like the Instinct MI400 series. AMD’s strategic investments and partnerships, such as the one with OpenAI, are likely to accelerate market growth and diversify the range of available products.

What is the significance of AMD’s recent investments in AI companies?

AMD’s acquisitions of and investments in 25 AI companies over the past year are significant for expanding its AI chip development capabilities. The strategy aims to strengthen AMD’s position in the AI market, helping it compete with established players such as Nvidia while advancing its product technology.

| Key Point | Details |
|---|---|
| Launch of AI Chips | AMD has unveiled the next-generation Instinct MI400 series AI chips. |
| Launch Event | The launch event in San Jose, California, featured CEO Lisa Su. |
| Collaboration with OpenAI | OpenAI CEO Sam Altman announced that his company will adopt the MI400 chips. |
| Rack-scale Systems | MI400 chips can be combined into large, interconnected ‘rack-scale’ systems. |
| Competitive Edge | AMD aims to compete against Nvidia’s chips, emphasizing cost-effectiveness. |
| Partnership Insights | OpenAI’s feedback has been vital to the MI400’s development, reflecting close collaboration. |
| Market Strategy | AMD has acquired or invested in 25 AI firms to strengthen its market position. |
| Future Markets | The AI chip market is projected to exceed $500 billion by 2028. |

Summary

AMD AI chips are set to transform the AI landscape with the next-generation Instinct MI400 series, which reflects the company’s commitment to high-performance computing for large infrastructure needs. Supported by partnerships such as the one with OpenAI, AMD is poised to strengthen its position in the competitive AI chip market, particularly as it aims to undercut competitors on price thanks to lower operating costs. This strategy reflects both AMD’s focus on quality and performance and its goal of meeting growing demand in an AI chip market projected to exceed $500 billion by 2028.