CFPB Funding Cuts: Implications of Trump’s Big Beautiful Bill

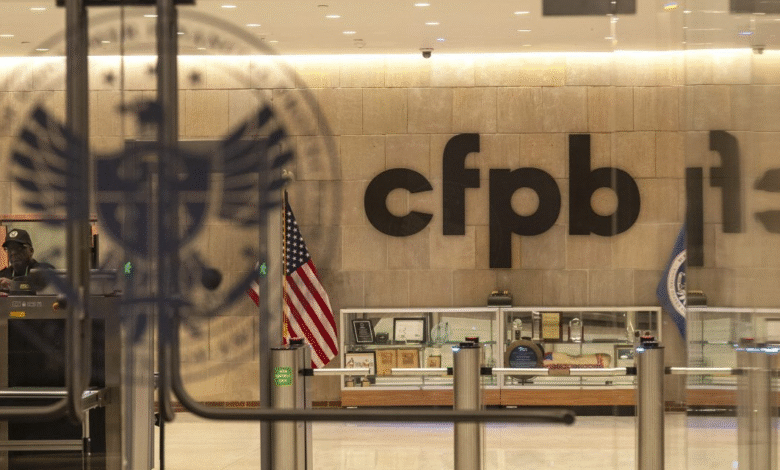

Recent changes to the Consumer Financial Protection Bureau (CFPB) have raised serious concerns about its future effectiveness, chiefly because of cuts to its funding. Under President Trump’s “big beautiful bill,” the cap on the CFPB’s annual funding falls to just 6.5% of the Federal Reserve’s operating expenses, the lowest level in the agency’s history. Consumer advocates fear the cut will weaken oversight of financial firms and leave the Bureau less able to enforce consumer protection laws. Because many Americans rely on the CFPB to guard against financial misconduct, the reduction could have far-reaching consequences for consumers in the marketplace.

The cuts have alarmed consumer advocates. The CFPB supervises banks and other financial companies to ensure they comply with consumer protection laws, and it must now do that work with far fewer resources. Many experts worry that the Bureau will struggle to carry out its core functions of monitoring financial firms and resolving consumer complaints, leaving gaps in oversight while financial products and markets keep changing. For consumers and advocates alike, understanding what the funding change means has become essential.

Understanding the Impact of CFPB Funding Cuts

The funding cuts mark a significant shift in consumer financial protection. With the annual funding cap lowered to 6.5% of the Federal Reserve’s operating expenses, down from 12%, the Bureau faces the tightest budget in its history. Consumer advocates warn that the cut will weaken the CFPB’s ability to enforce consumer protection laws and leave millions of Americans more exposed to abusive financial practices. The situation resembles David versus Goliath: an agency with shrinking resources confronting some of the largest and most powerful financial institutions.

The reduction limits not only the CFPB’s capacity to monitor and regulate financial firms but also the relief it has historically secured for consumers. Since its inception, the CFPB has recovered more than $21 billion for consumers harmed by financial wrongdoing, a record that takes substantial resources to sustain. With less money, the Bureau will likely find it harder to enforce consumer financial protection laws rigorously and to handle public complaints promptly.

Frequently Asked Questions

What are the implications of CFPB funding cuts under Trump’s big beautiful bill?

Trump’s ‘big beautiful bill’ cuts the cap on the CFPB’s funding by nearly half, limiting the agency’s capacity to enforce consumer protection laws. Advocacy groups warn that this will weaken oversight of financial firms and could harm consumers by restricting the Bureau’s ability to investigate misconduct and act on it.

How will the CFPB budget reduction impact consumers?

The budget reduction for the Consumer Financial Protection Bureau (CFPB) may lead to less rigorous enforcement of consumer protection laws. With a decreased budget, the CFPB may struggle to adequately supervise financial firms, potentially increasing risks for consumers who rely on the bureau for financial oversight.

What was the CFPB funding cap prior to the recent cuts?

Before the cuts, the CFPB’s funding was capped at 12% of the Federal Reserve’s operating expenses, a level that allowed it to carry out its mission of protecting consumers. The new law lowers this cap to 6.5%, significantly reducing the Bureau’s operating capacity.

Why was the CFPB created and how do funding cuts affect its mission?

The CFPB was created by the Dodd-Frank Act of 2010, in response to the 2008 financial crisis, to oversee consumer financial protection. With less funding, it may find it harder to carry out that mission, including bringing enforcement actions against financial firms and resolving consumer complaints.

What effects might the CFPB funding cuts have on financial oversight?

The CFPB funding cuts could diminish financial oversight by limiting the bureau’s resources for monitoring and regulating financial institutions. This may result in less accountability for financial firms, potentially leading to increased consumer harm.

How does CFPB funding differ from other federal agencies?

Unlike most federal agencies, the CFPB does not depend on annual congressional appropriations. Instead, it draws funds from the Federal Reserve System, up to a statutory cap. This structure was designed to shield the Bureau from political interference, but the lower cap reduces its financial security and its ability to function as a consumer watchdog.

What actions have consumer advocates taken in response to CFPB funding cuts?

Consumer advocates have expressed strong opposition to the CFPB funding cuts, emphasizing that a reduced budget will hinder the agency’s ability to protect consumers and enforce financial laws. They have organized rallies and campaigns to raise awareness of the potential negative impacts of these cuts on consumer rights.

How has the CFPB’s budget evolved over the years leading up to these cuts?

The funding available to the CFPB grew from $598 million in 2013 to a cap of $823 million for fiscal year 2025. Applying the new 6.5% rate would lower that cap to approximately $446 million, a significant decrease in the agency’s operating resources that raises concerns about its future effectiveness.
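
The approximately $446 million figure can be reproduced from the other numbers in this article. The short Python sketch below assumes, as a simplification, that the dollar cap scales in direct proportion to the percentage rate, so the FY2025 cap of $823 million is simply rescaled from 12% to 6.5%. The variable and function names are illustrative only.

```python
# Illustrative sketch only: assumes the dollar cap scales in direct
# proportion to the percentage of Federal Reserve operating expenses.
OLD_RATE = 0.12         # prior cap: 12% of Fed operating expenses
NEW_RATE = 0.065        # new cap: 6.5% of Fed operating expenses
OLD_CAP_MILLIONS = 823  # FY2025 cap, in millions of dollars


def rescale_cap(old_cap: float, old_rate: float, new_rate: float) -> float:
    """Scale a dollar cap proportionally from old_rate to new_rate."""
    return old_cap * new_rate / old_rate


new_cap = rescale_cap(OLD_CAP_MILLIONS, OLD_RATE, NEW_RATE)
reduction = 1 - NEW_RATE / OLD_RATE  # fractional drop in the cap

print(f"New cap: ~${new_cap:.0f} million")  # New cap: ~$446 million
print(f"Reduction: {reduction:.1%}")         # Reduction: 45.8%
```

The same calculation shows the new rate is roughly 46% below the old one, which is why the change is often described as cutting the cap nearly in half.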

What alternatives to the CFPB exist for consumer financial protection?

The CFPB is the primary federal agency dedicated to consumer financial protection, but other regulators, such as the federal bank regulators and the Federal Trade Commission (FTC), also provide oversight. Spreading that responsibility across several agencies can leave gaps in consumer protection, especially if the CFPB can no longer play the comprehensive role it was designed for.

What potential future changes could occur in the CFPB following funding cuts?

With less funding, the CFPB may have to scale back its operations and narrow its regulatory reach. Because the cap is set by statute, restoring the previous level would likely require new legislation from a future Congress. In the meantime, the cuts raise concerns about the agency’s long-term ability to fulfill its oversight mission and enforce consumer protection laws.

| Key Point | Details |
|---|---|
| CFPB Funding Cuts | The ‘big beautiful bill’ signed by President Trump cuts the CFPB’s funding cap by nearly half. |
| Funding Percentage Change | The cap drops from 12% to 6.5% of the Federal Reserve’s operating expenses, the lowest level in the agency’s history. |
| Impact on Consumer Protection | Consumer advocates warn that reduced funding will impair the CFPB’s capacity to oversee financial firms and enforce regulations. |
| Historical Context | The CFPB was established after the 2008 financial crisis to protect consumers. The cut may weaken its ability to regulate major financial institutions. |
| Budget Cap Details | The FY2025 cap is $823 million; the new 6.5% rate would reduce it to approximately $446 million. |

Summary

The CFPB funding cuts significantly affect the Consumer Financial Protection Bureau’s operations. The legislation signed by President Trump nearly halves the cap on the agency’s funding, alarming consumer advocates who expect weaker oversight and enforcement of consumer financial protections. With fewer resources, the CFPB may struggle to fulfill its mission of protecting consumers from misconduct in the financial industry.